The example above is unlikely to be applicable to any real work.

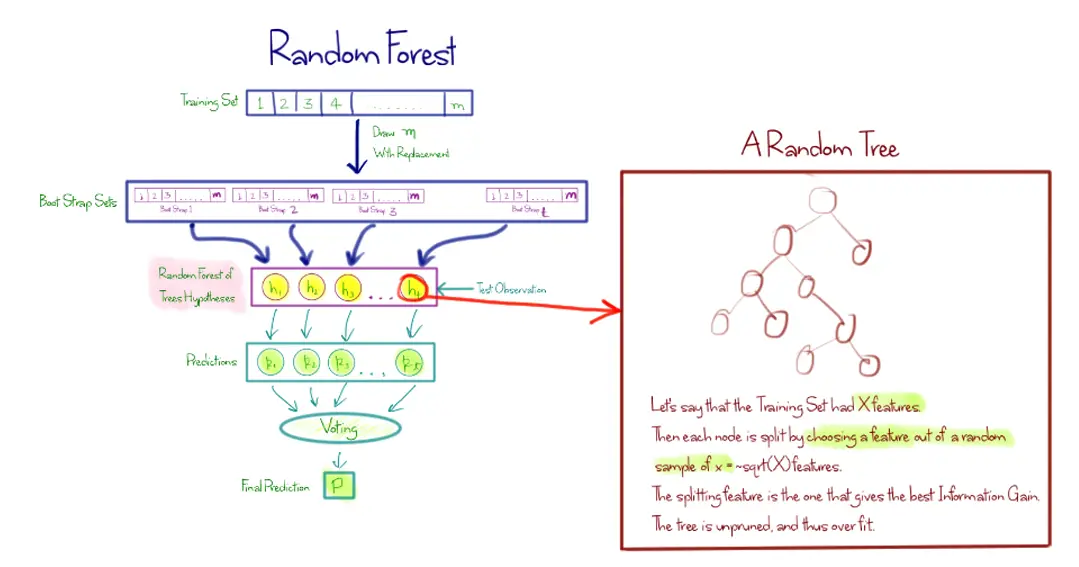

Algorithm 15.1: Random Forest for Regression.

Bagging is effective on small datasets. Dropping even a small part of the training data leads to substantially different base classifiers. Remember that we have already proved this theoretically. If you have a large dataset, you would generate bootstrap samples of a much smaller size. Additionally, outliers are likely to be omitted from some of the bootstrap training samples. Trees are ideal candidates for bagging, since they can capture complex interactions.

The scikit-learn library supports bagging with the meta-estimators BaggingRegressor and BaggingClassifier, and most algorithms can be used as the base estimator. Let's examine how bagging works in practice and compare it with a decision tree. For this, we will use an example from sklearn's documentation.
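As a rough illustration of that comparison, here is a minimal sketch that fits a single decision tree and a bagged ensemble of trees on the same data. It is not the example from sklearn's documentation: the noisy sine dataset, the sample sizes, and the choice of 50 estimators are all assumptions made for this sketch.

```python
import numpy as np
from sklearn.ensemble import BaggingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (an assumption for illustration): a noisy sine curve.
rng = np.random.RandomState(42)
X_train = rng.uniform(-5, 5, size=(200, 1))
y_train = np.sin(X_train).ravel() + rng.normal(scale=0.3, size=200)
X_test = np.linspace(-5, 5, 500).reshape(-1, 1)
y_test = np.sin(X_test).ravel()  # noise-free target used for evaluation

# A single, fully grown decision tree.
tree = DecisionTreeRegressor(random_state=0).fit(X_train, y_train)

# Bagging: 50 trees, each fit on its own bootstrap sample.
# The base estimator is passed positionally because the keyword name
# differs between scikit-learn versions.
bagging = BaggingRegressor(
    DecisionTreeRegressor(), n_estimators=50, random_state=0
).fit(X_train, y_train)

tree_mse = mean_squared_error(y_test, tree.predict(X_test))
bagging_mse = mean_squared_error(y_test, bagging.predict(X_test))
print(f"single tree MSE: {tree_mse:.4f}")
print(f"bagging MSE:     {bagging_mse:.4f}")
```

For a large dataset, the `max_samples` parameter of BaggingRegressor can be set below its default to draw smaller bootstrap samples, in line with the remark above.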

The total error decomposes into bias, variance, and irreducible noise: \(\text{Err}(\vec{x}) = \text{Bias}(\hat{f})^2 + \text{Var}(\hat{f}) + \sigma^2\). Bagging reduces the variance of a classifier by decreasing the difference in error when we train the model on different datasets. In other words, bagging helps prevent overfitting. The efficiency of bagging comes from the fact that the individual models are quite different due to the different training data, so their errors cancel each other out during voting.
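To see how errors can cancel during voting, consider an idealized case: every model is correct with the same probability and the models err independently of each other. Real bagged models are correlated, so this is an optimistic assumption, and the function name `majority_vote_accuracy` is introduced here only for illustration. Under that assumption, the accuracy of a majority vote follows directly from the binomial distribution:

```python
from math import comb


def majority_vote_accuracy(n_models: int, p_correct: float) -> float:
    """Probability that a strict majority of n independent models is right.

    Assumes each model is correct with probability p_correct and that
    the models' errors are independent (an idealization).
    """
    k_min = n_models // 2 + 1  # smallest count that forms a strict majority
    return sum(
        comb(n_models, k) * p_correct**k * (1 - p_correct) ** (n_models - k)
        for k in range(k_min, n_models + 1)
    )


# Odd ensemble sizes avoid ties in the vote.
for n in (1, 5, 25, 101):
    print(n, round(majority_vote_accuracy(n, 0.6), 4))
```

With models that are individually only slightly better than chance, the voted accuracy grows with the ensemble size. The more correlated the models are, the smaller this gain becomes, which is why training each model on different data matters.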


mlcourse.ai – Open Machine Learning Course

Authors: Vitaliy Radchenko and Yury Kashnitsky. Translated and edited by Christina Butsko, Egor Polusmak, Anastasia Manokhina, Anna Shirshova, and Yuanyuan Pao. This material is subject to the terms and conditions of the Creative Commons CC BY-NC-SA 4.0 license. Free use is permitted for any non-commercial purpose.

The algorithm works as follows: sample \(m\) data sets \(D_1,\dots,D_m\) from \(D\) with replacement.

One of the most famous and useful bagged algorithms is the Random Forest. A Random Forest is essentially bagged decision trees with a slightly modified splitting criterion.
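The sampling step, drawing \(m\) data sets from \(D\) with replacement, can be sketched with the standard library alone. The helper name `bootstrap_samples`, the toy dataset of 1000 integers, and the choice \(m = 5\) are assumptions made for this illustration.

```python
import random


def bootstrap_samples(data, m, sample_size=None, seed=0):
    """Draw m samples from data with replacement.

    Each sample has len(data) elements unless sample_size is given.
    """
    rng = random.Random(seed)
    size = len(data) if sample_size is None else sample_size
    return [rng.choices(data, k=size) for _ in range(m)]


D = list(range(1000))  # toy dataset: 1000 distinct points
samples = bootstrap_samples(D, m=5)

for i, D_i in enumerate(samples, start=1):
    unique_share = len(set(D_i)) / len(D)
    print(f"D_{i}: {len(D_i)} draws, {unique_share:.1%} of D present")
```

Because the draws are made with replacement, each sample contains duplicates and leaves out part of \(D\). For samples of the same size as \(D\), the expected share of distinct points approaches \(1 - 1/e\), roughly 63%.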

Bagging stands for Bootstrap and Aggregating. It employs the idea of the bootstrap, but the purpose is not to study the bias and standard errors of estimates. Instead, the goal of bagging is to improve prediction accuracy. It fits a tree to each bootstrap sample and then aggregates the predicted values from all these different trees.
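The fit-then-aggregate procedure can be sketched from scratch. To keep the example short, the base learner here is a depth-1 regression tree (a stump) on a single feature, which is a simplification and not what a library implementation would use. The names `fit_stump` and `bagged_predictor` and the noisy step-function data are assumptions made for this sketch.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Fit a depth-1 regression tree on one feature by minimizing squared error."""
    best = None
    # Skip the smallest value so that both sides of the split are non-empty.
    for threshold in sorted(set(xs))[1:]:
        left = [y for x, y in zip(xs, ys) if x < threshold]
        right = [y for x, y in zip(xs, ys) if x >= threshold]
        l_mean, r_mean = mean(left), mean(right)
        sse = sum((y - l_mean) ** 2 for y in left)
        sse += sum((y - r_mean) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, l_mean, r_mean)
    if best is None:  # all x values identical: predict the overall mean
        overall = mean(ys)
        return lambda x: overall
    _, threshold, l_mean, r_mean = best
    return lambda x: l_mean if x < threshold else r_mean


def bagged_predictor(xs, ys, n_trees=25, seed=0):
    """Fit one stump per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample of indices
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(tree(x) for tree in trees)  # aggregate by averaging


# Toy data (an assumption): a step at x = 3 with Gaussian noise.
data_rng = random.Random(1)
xs = [i / 10 for i in range(60)]
ys = [(1.0 if x >= 3 else 0.0) + data_rng.gauss(0, 0.2) for x in xs]

predict = bagged_predictor(xs, ys)
print(predict(1.0), predict(5.0))
```

Averaging is the natural aggregation for regression. For classification, the same structure applies with a majority vote in place of the mean.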
